Advanced usage

Binocular NIR Liveness Detection

1. Camera requirements

To get good results from binocular liveness detection, the algorithm places requirements on the infrared camera and the infrared fill light. Choose an infrared camera with clear imaging, and control the fill-light intensity so that the infrared image is neither too bright nor too dark.

| Parameter | Requirement |
|---|---|
| WDR | >= 80 dB |
| SNR | >= 50 dB |
| White balance | Enabled (recommended) |
| FPS | > 20 fps |
| Format | YUV/MJPG |
| Resolution | >= 480p |
| Binocular distance | <= 3 cm |

[Example images: normal infrared exposure; infrared overexposure (unsuitable); infrared underexposure (unsuitable)]

2. Metrics

Liveness detection is evaluated with the following standard metrics:

False attack accept rate: the share of attack requests that are wrongly accepted. At 0.5%, 5 out of 1000 attack requests are accepted by mistake because their liveness score is above the threshold.

Pass rate (TAR): the share of real-person requests that pass. At 99%, 99 out of 100 real-person requests pass because their liveness score is above the threshold.

Threshold: a score above this value is judged to be a live person. 0.4 is recommended.
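Both rates can be estimated from a set of labeled test scores. The following is a minimal sketch, not part of the SDK: the class name, the method name, and the sample scores are hypothetical, and it assumes only what is stated above, namely that a score above the threshold is treated as live.

```java
/** Sketch: estimate the false attack accept rate and the pass rate for one threshold. */
final class LivenessMetrics {

    /** Returns the fraction of scores strictly above the threshold. */
    static double fractionAbove(float[] scores, float threshold) {
        if (scores.length == 0) {
            throw new IllegalArgumentException("scores must not be empty");
        }
        int accepted = 0;
        for (float s : scores) {
            if (s > threshold) {
                accepted++;
            }
        }
        return (double) accepted / scores.length;
    }

    public static void main(String[] args) {
        // Hypothetical scores, made up for illustration only.
        float[] attackScores = {0.05f, 0.10f, 0.45f, 0.20f};  // spoof attempts
        float[] genuineScores = {0.90f, 0.85f, 0.35f, 0.95f}; // real people
        float threshold = 0.4f;

        // Attacks scoring above the threshold are accepted by mistake.
        double falseAcceptRate = fractionAbove(attackScores, threshold);
        // Real people scoring above the threshold pass.
        double passRate = fractionAbove(genuineScores, threshold);

        System.out.printf("False attack accept rate: %.2f%n", falseAcceptRate);
        System.out.printf("Pass rate (TAR): %.2f%n", passRate);
    }
}
```

Raising the threshold lowers both rates at once, so the threshold is usually chosen by evaluating several candidate values on scores collected with the target camera.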

3. Usage

    // Liveness detection (color_box: face box from the color camera; ir_box: face box from the infrared camera)
    float score = dfaceNIRLiveness.liveness_check(color_box, ir_box);

4. Android native Camera API binocular configuration

Check with the development board manufacturer whether the native Camera API of the Android firmware supports two simultaneous camera previews. By default, Android 5.1 and earlier support only one camera preview at a time. If the firmware does not support binocular preview, you need to recompile the HAL (hardware abstraction layer).

In CameraHal_Module.h, change the value of #define CAMERAS_SUPPORTED_SIMUL_MAX from 1 to 2.

RGB Monocular Liveness Detection

1. Camera requirements

At present, monocular liveness detection works best with a wide dynamic range (WDR) camera. You can use either a USB WDR camera or a MIPI camera with the board's ISP. Excessive camera noise also degrades single-RGB liveness detection.

| Parameter | Requirement |
|---|---|
| WDR | >= 80 dB |
| SNR | >= 50 dB |
| White balance | Enabled (recommended) |
| FPS | > 20 fps |
| Format | YUV/MJPG |
| Resolution | >= 480p |
| Binocular distance | <= 3 cm |

2. Level configuration

At present, RGB liveness detection provides four levels, including LEVEL_1, LEVEL_2, and LEVEL_3. Select LEVEL_1 for RGB+NIR liveness detection and LEVEL_2 for single-RGB liveness detection.

3. Usage

    // Liveness detection
    float score = dfaceRGBLiveness.liveness_check(frame, bbox);

4. Metrics

Liveness detection is evaluated with the following standard metrics:

False attack accept rate: the share of attack requests that are wrongly accepted. At 0.5%, 5 out of 1000 attack requests are accepted by mistake because their liveness score is above the threshold.

Pass rate (TAR): the share of real-person requests that pass. At 99%, 99 out of 100 real-person requests pass because their liveness score is above the threshold.

Threshold: a score above this value is judged to be a live person. A value between 0.2 and 0.5 is recommended.

Liveness Detection Selection

1. Security rank

From most to least secure: RGB Liveness (LEVEL_1) + NIR Liveness > RGB Liveness (LEVEL_2) > NIR Liveness

Face Detection And Recognition

1. Detection accuracy and performance configuration

Face detection performance depends mainly on the working mode and the minimum face size. The larger the minimum face size, the faster detection runs, but the lower the detection accuracy. Two working modes are provided: speed priority and precision priority. You can switch between them by scene: use speed priority mode for ordinary camera detection, and precision priority mode for picture detection (ID cards, registration photos) to increase detection accuracy.

    // Set the minimum face size
    dfaceDetect.SetMinSize(80);
    // Detect faces
    List<Bbox> box = dfaceDetect.detectionMax(frame);

2. Recognition distance configuration

The recognition distance depends mainly on the camera resolution and the minimum face size settings. There are two such settings: the minimum face size for detection and the minimum face size for recognition. The smaller the value, the farther away faces can be detected and recognized (note that a smaller minimum detection size also slows detection). The higher the camera resolution, the greater the maximum detection and recognition distance.

| Camera resolution | Minimum detection size | Minimum recognition size | Valid distance |
|---|---|---|---|
| 480p | 100 px | 100 px | 0.7 m |
| 720p | 120 px | 100 px | 2.0 m |
| 1080p | 160 px | 100 px | 5.0 m |
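The relationship between minimum face size and distance follows from projection geometry. As a rough sketch under a pinhole camera model (an assumption for illustration, not something the SDK documents), a face of physical width W at distance d spans about f_px * W / d pixels, where f_px is the focal length in pixels. The class name, the focal length, and the 0.16 m face width below are hypothetical; the measured values in the table take precedence over this estimate.

```java
/** Sketch: pinhole-camera estimate of the farthest distance at which a face still meets a minimum pixel size. */
final class FaceDistanceEstimate {

    /**
     * @param focalLengthPx focal length in pixels (assumed known, e.g. from calibration)
     * @param faceWidthM    physical face width in metres (assumed value)
     * @param minFacePx     configured minimum face size in pixels
     * @return estimated maximum distance in metres
     */
    static double maxDistanceMeters(double focalLengthPx, double faceWidthM, double minFacePx) {
        if (focalLengthPx <= 0 || faceWidthM <= 0 || minFacePx <= 0) {
            throw new IllegalArgumentException("all arguments must be positive");
        }
        // Pinhole projection: facePx = focalLengthPx * faceWidthM / distance,
        // solved for distance at facePx == minFacePx.
        return focalLengthPx * faceWidthM / minFacePx;
    }

    public static void main(String[] args) {
        double focalLengthPx = 500.0; // hypothetical lens
        double faceWidthM = 0.16;     // hypothetical face width

        // Halving the minimum face size doubles the estimated distance.
        System.out.println(maxDistanceMeters(focalLengthPx, faceWidthM, 100));
        System.out.println(maxDistanceMeters(focalLengthPx, faceWidthM, 50));
    }
}
```

Under this model, a higher resolution with the same field of view raises the focal length in pixels, which is why the upper limit on distance grows with resolution.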

Improve recognition accuracy

To improve recognition accuracy in a production environment, weigh the following methods against their costs to find the most suitable balance.

| Method | Disadvantage |
|---|---|
| Raise the recognition threshold | Increases identification confirmation time |
| Increase face quality filtering | Extra compute time |
| Improve the quality of the face database | Increases database maintenance cost |
| Switch to a higher accuracy model | Extra compute time |

Face feature comparison (open-source code)

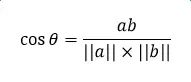

We use cosine similarity to compare two facial features, each stored as a byte array.

Java version:

    public static double sumSquares(float[] data) {
        double ans = 0.0;
        for (int k = 0; k < data.length; k++) {
            ans += data[k] * data[k];
        }
        return ans;
    }

    public static double norm(float[] data) {
        return Math.sqrt(sumSquares(data));
    }

    public static double dotProduct(float[] v1, float[] v2) {
        double result = 0;
        for (int i = 0; i < v1.length; i++) {
            result += v1[i] * v2[i];
        }
        return result;
    }

    /**
     * Compare the cosine similarity of two face features, cosine = (v1 dot v2)/(||v1|| * ||v2||)
     * @param feature1 first face feature byte array
     * @param feature2 second face feature byte array
     * @note Bytes are read as little-endian by default
     * @return face feature cosine similarity, normalized to 0~1
     */
    public static double similarityByFeature(byte[] feature1, byte[] feature2) {
        // Decode every 4 little-endian bytes of feature1 into one float
        float[] featureArr1 = new float[feature1.length / 4];
        for (int x = 0; x + 3 < feature1.length; x += 4) {
            int accum = 0;
            accum |= (feature1[x] & 0xff);
            accum |= (feature1[x + 1] & 0xff) << 8;
            accum |= (feature1[x + 2] & 0xff) << 16;
            accum |= (feature1[x + 3] & 0xff) << 24;
            featureArr1[x / 4] = Float.intBitsToFloat(accum);
        }

        // Decode feature2 the same way
        float[] featureArr2 = new float[feature2.length / 4];
        for (int x = 0; x + 3 < feature2.length; x += 4) {
            int accum = 0;
            accum |= (feature2[x] & 0xff);
            accum |= (feature2[x + 1] & 0xff) << 8;
            accum |= (feature2[x + 2] & 0xff) << 16;
            accum |= (feature2[x + 3] & 0xff) << 24;
            featureArr2[x / 4] = Float.intBitsToFloat(accum);
        }

        // ||v1||
        double feature1_norm = norm(featureArr1);
        // ||v2||
        double feature2_norm = norm(featureArr2);
        // v1 dot v2
        double innerProduct = dotProduct(featureArr1, featureArr2);
        // cosine = (v1 dot v2)/(||v1|| * ||v2||)
        double cosine = innerProduct / (feature1_norm * feature2_norm);
        // Normalize from -1~1 to 0~1
        double normCosine = 0.5 + 0.5 * cosine;
        return normCosine;
    }
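The manual bit shifting above can also be written with the standard java.nio.ByteBuffer class. The following is a minimal sketch, assuming features are little-endian 32-bit floats as noted above; the class and helper names are hypothetical and not part of the SDK. It also shows the range of the normalized score with three hand-made vectors.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

/** Sketch: decode little-endian float features with ByteBuffer and compare them. */
final class FeatureSimilarityDemo {

    /** Packs floats into a little-endian byte array (helper for building test features). */
    static byte[] toLittleEndianBytes(float[] values) {
        ByteBuffer buffer = ByteBuffer.allocate(values.length * Float.BYTES)
                .order(ByteOrder.LITTLE_ENDIAN);
        for (float v : values) {
            buffer.putFloat(v);
        }
        return buffer.array();
    }

    /** Unpacks a little-endian byte array into floats; trailing bytes beyond a multiple of 4 are ignored. */
    static float[] toFloats(byte[] bytes) {
        float[] out = new float[bytes.length / Float.BYTES];
        ByteBuffer.wrap(bytes).order(ByteOrder.LITTLE_ENDIAN).asFloatBuffer().get(out);
        return out;
    }

    /** Cosine similarity rescaled from [-1, 1] to [0, 1]. */
    static double similarity(byte[] feature1, byte[] feature2) {
        float[] a = toFloats(feature1);
        float[] b = toFloats(feature2);
        if (a.length != b.length) {
            throw new IllegalArgumentException("features must have the same length");
        }
        double dot = 0.0, sumA = 0.0, sumB = 0.0;
        for (int i = 0; i < a.length; i++) {
            dot += (double) a[i] * b[i];
            sumA += (double) a[i] * a[i];
            sumB += (double) b[i] * b[i];
        }
        double cosine = dot / (Math.sqrt(sumA) * Math.sqrt(sumB));
        return 0.5 + 0.5 * cosine;
    }

    public static void main(String[] args) {
        byte[] x = toLittleEndianBytes(new float[]{1f, 0f});
        byte[] y = toLittleEndianBytes(new float[]{0f, 1f});
        byte[] z = toLittleEndianBytes(new float[]{-1f, 0f});

        System.out.println(similarity(x, x)); // identical direction: 1.0
        System.out.println(similarity(x, y)); // orthogonal: 0.5
        System.out.println(similarity(x, z)); // opposite direction: 0.0
    }
}
```

Because the raw cosine is rescaled to 0~1, unrelated features score around 0.5 rather than 0, which matters when choosing a recognition threshold.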

C++ version:

    #include <cmath>
    #include <cstdint>
    #include <cstring>
    #include <vector>

    // Decode little-endian bytes into floats, 4 bytes per value
    static void convertBytes2Feature(const unsigned char* bytes, int length, std::vector<float>& feature) {
        int featureLen = length / 4;
        for (int i = 0; i < featureLen; i++) {
            // Assemble the bits in an unsigned integer so the shifts cannot overflow
            std::uint32_t bits = bytes[4 * i + 3];
            bits = (bits << 8) | bytes[4 * i + 2];
            bits = (bits << 8) | bytes[4 * i + 1];
            bits = (bits << 8) | bytes[4 * i + 0];
            // memcpy reinterprets the bits as a float without relying on union type punning
            float value;
            std::memcpy(&value, &bits, sizeof(value));
            feature.push_back(value);
        }
    }

    // Vector norm
    static float norm(const std::vector<float>& vec) {
        float sum = 0.0f;
        for (std::size_t i = 0; i < vec.size(); ++i)
            sum += vec[i] * vec[i];
        return std::sqrt(sum);
    }

    /**
     * Compare face feature cosine similarity.
     * @param[in] feature1 First face feature
     * @param[in] feature2 Second face feature
     * @return face feature similarity, normalized to 0~1
     * @note cosine = (v1 dot v2)/(||v1|| * ||v2||)
     */
    static float similarityByFeature(const std::vector<unsigned char>& feature1, const std::vector<unsigned char>& feature2) {
        std::vector<float> feature_arr1;
        std::vector<float> feature_arr2;
        convertBytes2Feature(feature1.data(), static_cast<int>(feature1.size()), feature_arr1);
        convertBytes2Feature(feature2.data(), static_cast<int>(feature2.size()), feature_arr2);

        // Dot product; both features are expected to have the same length
        std::size_t n = feature_arr1.size();
        float tmp = 0.0f;
        for (std::size_t i = 0; i < n; ++i) {
            tmp += feature_arr1[i] * feature_arr2[i];
        }

        float cosine = tmp / (norm(feature_arr1) * norm(feature_arr2));
        // Normalize from -1~1 to 0~1
        float norm_cosine = 0.5f + 0.5f * cosine;
        return norm_cosine;
    }